Introduction

Did you know that recurrent neural networks (RNNs) reportedly contributed to the first AI-composed pop song? It is one sign of how capable these deep learning models are. They’ve changed many fields, such as natural language processing and speech recognition. In this guide, we’ll explore RNNs, their basics, and how they’re changing AI.

Recurrent neural networks are special deep learning models for handling sequential data. Unlike regular neural networks, RNNs remember and use past information. This lets them make smart choices based on what they’ve learned before.

This guide will teach you how RNNs work, their fundamental concepts, and how they have evolved. We’ll discuss everything from how they’re built to the most recent advances. By the end, you should have the tools you need to use RNNs in your own projects.

Unraveling the Power of Recurrent Neural Networks

Recurrent neural networks (RNNs) are specialized artificial neural networks. They excel at modeling sequential data and time dependencies. Unlike ordinary feedforward networks, RNNs can remember and apply previously seen information, making them suitable for tasks such as language modeling and speech recognition.

RNNs’ power stems from their capacity to maintain a hidden state. This state is updated with each step, acting as a memory. It enables the network to use previous data to make better decisions about future inputs. This distinguishes RNNs from other neural networks, allowing for new applications in a variety of fields.

RNNs are especially good at sequence modeling and time dependencies. They can pick up on the order and timing of events in data. This is why they’re so useful in tasks like natural language processing and music generation, where they can find complex patterns in sequential data.

As artificial neural networks keep getting better, RNNs will stay very important. They’ve already helped a lot in different fields. And as they keep improving, we can expect even more amazing things from them in the future.

The Fundamental Principles of Recurrent Neural Networks

The concept of recurrent connections is fundamental to recurrent neural networks (RNNs). These connections let the network remember past inputs and use them when producing the current output. This setup makes RNNs well suited to handling sequential data and understanding time relationships, which is why they are useful in many fields.

Understanding Recurrent Connections

RNNs are different from regular neural networks because they can share information over time. This happens through the recurrent connections. The output of a hidden layer depends on the current input and the previous hidden state. This cycle of information helps RNNs keep a continuous memory. This is key for tasks like understanding natural language and predicting time series.
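The dependence on the current input and the previous hidden state can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the `tanh` activation, the weight names (`W_xh`, `W_hh`, `b_h`), and the sizes are assumptions chosen for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One recurrent update: mix the current input with the previous hidden state."""
    return np.tanh(x_t @ W_xh + h_prev @ W_hh + b_h)

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Run the step over a whole sequence and collect every hidden state."""
    h = h0
    states = []
    for x_t in xs:  # strictly in order: step t needs the state from step t-1
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random weights (assumptions, not values from the article).
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(input_size, hidden_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
xs = rng.normal(size=(seq_len, input_size))

states = rnn_forward(xs, np.zeros(hidden_size), W_xh, W_hh, b_h)
print(states.shape)  # one hidden state per time step
```

Because each state feeds into the next, changing the first input changes every later hidden state. That carried-over influence is the "memory" described above.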

Sequence Modeling and Time Dependencies

RNNs are really good at solving sequence-to-sequence problems. They can handle both the input and output as sequences. This is because they can spot time relationships through their connections. By looking at data step by step, RNNs can find patterns and make predictions or create new sequences.

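RNNs learn these time relationships with a training method called backpropagation through time (BPTT). The network is unrolled across the time steps of a sequence, and the prediction error is propagated backward through every step so that the shared weights can be adjusted. It’s essential for tasks that depend on long sequences.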
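To make the idea concrete, here is a small sketch of backpropagation through time for a scalar RNN. The model `h_t = tanh(w * h_{t-1} + u * x_t)`, the squared-error loss on the final state, and all numeric values are assumptions picked to keep the example short. The hand-written gradient is compared with a finite-difference estimate as a sanity check.

```python
import numpy as np

def forward(w, u, xs):
    """Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(np.tanh(w * hs[-1] + u * x))
    return hs

def loss(w, u, xs, target):
    """Squared error on the final hidden state only."""
    return 0.5 * (forward(w, u, xs)[-1] - target) ** 2

def bptt_grad_w(w, u, xs, target):
    """Gradient of the loss with respect to the shared recurrent weight w."""
    hs = forward(w, u, xs)
    dh = hs[-1] - target                 # dL/dh_T
    grad_w = 0.0
    for t in range(len(xs), 0, -1):      # walk backward through time
        dpre = dh * (1.0 - hs[t] ** 2)   # back through tanh at step t
        grad_w += dpre * hs[t - 1]       # w is reused at every step, so contributions add up
        dh = dpre * w                    # pass the error back to h_{t-1}
    return grad_w

xs, w, u, target = [0.5, -0.3, 0.8, 0.1], 0.7, 1.2, 0.2
analytic = bptt_grad_w(w, u, xs, target)
eps = 1e-6
numeric = (loss(w + eps, u, xs, target) - loss(w - eps, u, xs, target)) / (2 * eps)
print(analytic, numeric)  # the two estimates should agree closely
```

The repeated multiplication by `w` and by the `tanh` derivative in the backward loop is also where vanishing or exploding gradients come from on long sequences.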

The core ideas of recurrent neural networks are recurrent connections, sequence modeling, and time dependencies. These principles help RNNs do well in many areas, from understanding language to predicting time series.

Recurrent Neural Networks in Action

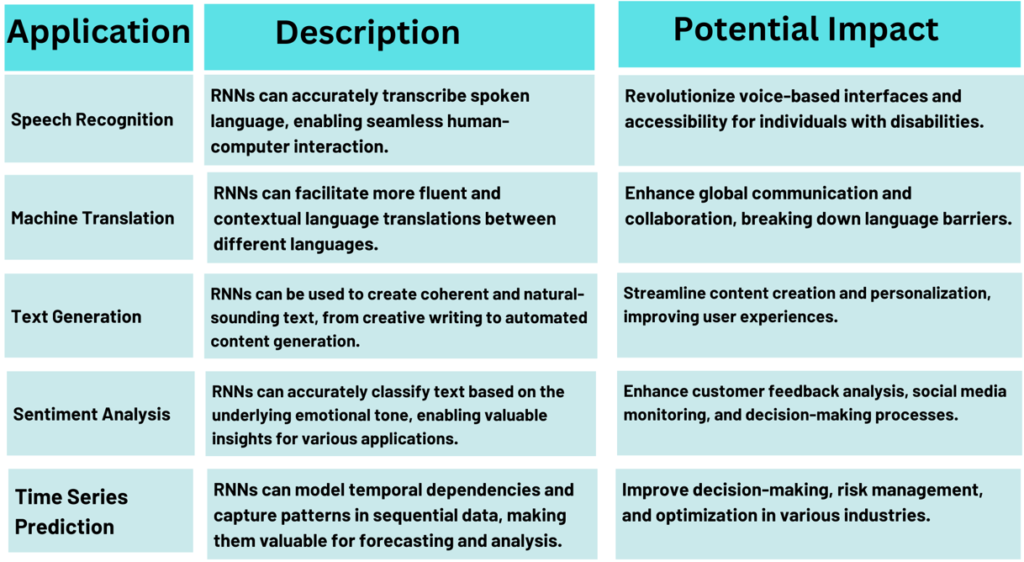

Recurrent neural networks have important applications in NLP. They are useful in speech recognition, machine translation, text generation, and sentiment analysis. Their ability to process information sequentially makes a real difference when dealing with long-term dependencies in language.

Natural Language Processing Marvels

In NLP, RNNs have made big strides. They help in speech recognition, making it easier to understand spoken words. They also excel in machine translation, breaking down language barriers. Plus, they’re great at text generation and sentiment analysis, helping us grasp emotions in text.

Time Series Prediction Unleashed

RNNs are also crucial in time series prediction. They’re good at finding patterns in data over time. This makes them vital in finance, climate, and IoT for accurate forecasting. They help us make better decisions with time-dependent data.
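Before an RNN can forecast a series, the data is usually cut into (past window, next value) pairs. The helper below is a hypothetical sketch of that preparation step. The function name `make_windows` and the toy numbers are assumptions, and real pipelines would typically add scaling and a train/test split.

```python
import numpy as np

def make_windows(series, window):
    """Split a 1-D series into (past window, next value) training pairs."""
    series = np.asarray(series, dtype=float)
    if window < 1 or window >= len(series):
        raise ValueError("window must be between 1 and len(series) - 1")
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]  # the value that immediately follows each window
    return X, y

X, y = make_windows([10, 11, 13, 12, 15, 16], window=3)
print(X)  # rows: [10 11 13], [11 13 12], [13 12 15]
print(y)  # targets: 12, 15, 16
```

Each row of `X` would be fed to the network one value per time step, and the network is trained to output the matching entry of `y`.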

| Application | RNN Capability | Benefit |
| --- | --- | --- |
| Speech Recognition | Capturing sequential information and long-range dependencies | Accurate transcription of spoken words |
| Machine Translation | Bridging language barriers through sequential modeling | Seamless translation between languages |
| Text Generation | Producing coherent and contextually relevant content | Automated content creation for various applications |
| Sentiment Analysis | Identifying and understanding emotional undertones in text | Insights into customer sentiment and opinions |
| Time Series Prediction | Capturing temporal dependencies and modeling complex patterns | Accurate forecasting and decision-making in dynamic environments |

RNNs keep evolving and finding new uses. They’re vital in shaping the future of NLP and time series prediction. They improve our experiences and help make data-driven decisions, making them essential in AI and machine learning.

Mastering Architectures: LSTM and Beyond

RNNs play an important role in many deep learning tasks. Building on the basic design, researchers have developed improved architectures that perform significantly better than the plain RNN. The most important step forward was the Long Short-Term Memory (LSTM) network.

The LSTM is designed for long sequences of data. Unlike a simple RNN, it retains information well over many time steps. This makes the LSTM a strong choice for tasks such as language understanding, audio recognition, and time series prediction.
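How an LSTM holds on to information can be sketched with a single cell step. The version below follows the commonly used formulation with input, forget, and output gates plus a candidate value. The stacked weight layout `[i, f, o, g]` and all names are assumptions of this sketch, and libraries order the blocks differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W, U, b hold four gate blocks stacked as [i, f, o, g]."""
    H = h_prev.shape[0]
    z = x_t @ W + h_prev @ U + b      # shape (4 * H,)
    i = sigmoid(z[0:H])               # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])           # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])       # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])       # candidate content
    c_t = f * c_prev + i * g          # additive update of the cell state
    h_t = o * np.tanh(c_t)
    return h_t, c_t

# Demo with hand-set biases: forget gate open, input gate closed.
input_size, H = 3, 4
W = np.zeros((input_size, 4 * H))
U = np.zeros((H, 4 * H))
b = np.zeros(4 * H)
b[0:H] = -20.0      # input gate close to 0
b[H:2 * H] = 20.0   # forget gate close to 1
c_prev = np.array([1.0, -2.0, 0.5, 3.0])
h_t, c_t = lstm_step(np.ones(input_size), np.zeros(H), c_prev, W, U, b)
print(c_t)  # stays very close to c_prev
```

With the forget gate open and the input gate closed, the cell state passes through the step almost unchanged, which is the mechanism that lets an LSTM carry information across many steps.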

But, there’s more to RNNs than just LSTMs. New architectures like Gated Recurrent Units (GRUs) have been developed. They aim to be simpler than LSTMs but still powerful. These advancements have opened up new areas in understanding language and predicting time series.
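A GRU step can be sketched the same way. It has no separate cell state and uses three weight blocks instead of the LSTM's four, which is where the "simpler" claim comes from. The parameter names and the convention `h_t = (1 - z) * h_prev + z * h_cand` are assumptions of this sketch, since some references swap the roles of `z` and `1 - z`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, p):
    """One GRU step; p is a dict of weights with hypothetical names."""
    z = sigmoid(x_t @ p["W_z"] + h_prev @ p["U_z"] + p["b_z"])  # update gate
    r = sigmoid(x_t @ p["W_r"] + h_prev @ p["U_r"] + p["b_r"])  # reset gate
    h_cand = np.tanh(x_t @ p["W_h"] + (r * h_prev) @ p["U_h"] + p["b_h"])
    return (1.0 - z) * h_prev + z * h_cand  # blend old state and candidate

def gated_param_count(input_size, hidden_size, n_blocks):
    """Parameters for n_blocks gate blocks (3 for this GRU, 4 for a standard LSTM)."""
    return n_blocks * (input_size * hidden_size + hidden_size * hidden_size + hidden_size)

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
params = {}
for gate in ("z", "r", "h"):
    params[f"W_{gate}"] = rng.normal(scale=0.5, size=(input_size, hidden_size))
    params[f"U_{gate}"] = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
    params[f"b_{gate}"] = np.zeros(hidden_size)

h_next = gru_step(rng.normal(size=input_size), np.zeros(hidden_size), params)
print(gated_param_count(3, 4, 3), gated_param_count(3, 4, 4))  # GRU vs LSTM: 96 vs 128
```

For the same input and hidden sizes, the GRU in this sketch has three quarters of the LSTM's parameters.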

The field of recurrent neural networks is always growing. Researchers keep finding new ways to improve them. By learning these advanced architectures, we can do even more with deep learning in many fields.

Recurrent Neural Networks vs. Convolutional Neural Networks

RNNs are best suited to data that arrives as a sequence and is connected over time. For image and video processing, a convolutional neural network (CNN) is usually the better option. Let’s look at the basic differences between these two deep learning models, highlighting their strengths and weaknesses.

Key Differences

Recurrent neural networks are designed for sequences of data, such as text or time series data. They have an internal state and evolve from one time step to the next. This allows RNNs to capture the interrelation and dependencies in the sequence, making them a wonderful fit for language understanding as well as speech recognition applications.

Convolutional neural networks (CNNs), by contrast, are built for spatial data such as images and video. They use convolutional and pooling layers to pick out the essential features in the data, which makes CNNs excellent at most computer vision tasks, including image classification and object detection.
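The two building blocks named here, convolution and pooling, can be illustrated with plain NumPy. This is a deliberately naive sketch (single channel, no padding, stride 1, loop-based). The function names and the toy edge image are assumptions made for the example.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a kernel over a 2-D image (cross-correlation, no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 patch."""
    h, w = feature_map.shape
    trimmed = feature_map[:h - h % 2, :w - w % 2]  # drop an odd last row/column
    return trimmed.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy image: dark on the left, bright on the right (a vertical edge).
image = np.zeros((4, 5))
image[:, 3:] = 1.0
edge_kernel = np.array([[-1.0, 1.0]])  # responds where brightness increases left to right

features = conv2d_valid(image, edge_kernel)
pooled = max_pool2x2(features)
print(features)  # non-zero only in the column where the edge sits
print(pooled)
```

The same small kernel is applied at every position, so the filter detects the edge wherever it appears in the image. Each output position is also independent of the others, unlike the step-by-step dependence in an RNN.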

The biggest difference lies in how the two models treat space and time. RNNs are a good fit for sequential data, where meaning depends on order. CNNs capture spatial relationships, such as where objects are located within an image.

Training and compute requirements differ too. RNNs are harder to train efficiently because they process a sequence one step at a time, so the work for a step cannot begin until the previous step is done. CNNs apply the same filters across the whole input at once, which parallelizes well and makes them easier to train. In the end, the choice depends on the problem at hand. RNNs are better suited to tasks built around sequences, like language modeling and time series prediction. CNNs are better suited to tasks where spatial relationships matter, such as image and video processing.

Recurrent Neural Networks: Warping Time?

RNNs are more than just tools for handling sequential data. They can handle time in unique ways, adapting to different time scales. But can they really “warp” time? Let’s dive into the fascinating world of time manipulation in RNNs.

RNNs are great at tasks like understanding natural language, where timing matters a lot. But can they handle more complex time patterns? The answer is yes, thanks to their flexibility.

At their heart, RNNs have a hidden state that changes over time. This state helps them remember long-term details and understand complex sequences. It’s like an internal memory that lets RNNs adjust to different time scales and even bend time.

RNNs do not literally warp time. Rather, gated architectures can learn how quickly to update their hidden state, which lets them stretch or compress their effective time scale to match the data. This is especially useful in speech recognition, where speaking rate can change a lot.

This flexibility with time scales makes RNNs more powerful and adaptable. They can handle time patterns that fixed-rate models struggle with, which opens up possibilities in areas like finance and medicine.
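One simple way to picture an adjustable time scale is a leaky hidden state, where a rate `alpha` controls how fast the state follows its input. This is only an illustrative sketch: the update rule, the name `leaky_trace`, and the fixed `alpha` values are assumptions. In a gated RNN the equivalent rate would be learned and could change at every step.

```python
import numpy as np

def leaky_trace(xs, alpha):
    """Leaky hidden state: h_t = (1 - alpha) * h_{t-1} + alpha * x_t, with h_0 = 0."""
    h = 0.0
    trace = []
    for x in xs:
        h = (1.0 - alpha) * h + alpha * x
        trace.append(h)
    return np.array(trace)

step_input = np.ones(10)                    # input switches on and stays on
fast = leaky_trace(step_input, alpha=0.5)   # short time scale: reacts quickly
slow = leaky_trace(step_input, alpha=0.1)   # long time scale: reacts gradually
print(np.round(fast, 3))
print(np.round(slow, 3))
```

A small `alpha` makes the state evolve as if time were passing more slowly, so the same mechanism can follow a slow speaker and a fast one by using different rates.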

As deep learning grows, so does what RNNs can do. The ability to play with time in these models is an exciting area. It’s a frontier that could lead to big advances in AI and sequence modeling.

Applications Galore: When to Use Recurrent Neural Networks

Recurrent neural networks (RNNs) are a key part of deep learning, a branch of machine learning. They are used across many industries and sectors, handling difficult tasks such as speech recognition, machine translation, and text generation.

Speech Recognition and Machine Translation

RNNs are excellent at speech recognition. They model speech patterns well, which helps computers understand us better. They also improve machine translation, making translation between languages smoother and more natural.

Text Generation and Sentiment Analysis

RNNs are good at creating text that sounds natural. They can write stories or make content automatically. They also understand emotions in text, helping us analyze feelings in customer feedback and social media.

RNNs are also good at predicting trends in data. They look for patterns in data over time. This is useful for forecasting and financial analysis.

Transformers: The Next Generation of Recurrent Neural Networks?

In deep learning, recurrent neural networks (RNNs) have been key for tasks like natural language processing. Recently, Transformers have shown up as a strong contender. They use an attention mechanism to outperform RNNs in many NLP tasks, especially in large language models like BERT and GPT.

The attention mechanism in Transformers lets the model focus on the most relevant parts of the input, wherever they appear in the sequence. This is different from RNNs, which can find it hard to handle long-range dependencies. Transformers are great at understanding language and context, making them ideal for complex tasks.
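The core of that mechanism, scaled dot-product attention, fits in a few lines. The sketch below is a single-head version without masking or learned projections. The shapes and random inputs are assumptions for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Each query scores every key, and the scores weight an average of the values."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)          # (n_queries, n_keys)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))   # 4 query positions
K = rng.normal(size=(6, 8))   # 6 key positions
V = rng.normal(size=(6, 5))   # one value vector per key
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.shape)
```

All positions are handled in one pair of matrix multiplications, with no loop over time steps. Any query can attend directly to any key, regardless of how far apart they sit in the sequence.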

Transformers also have a big advantage over RNNs: they can process data in parallel. This makes training and using them much faster. This is crucial for large NLP models that need lots of computing power.

Now, the question is: are Transformers the next step after RNNs? While RNNs have been the standard for years, Transformers are changing the game. It’s possible that we’ll see a mix of both, making even more powerful models for many tasks.

The battle between RNNs and Transformers shows how fast deep learning is evolving. By knowing what each architecture does well, we can choose the best one for our needs. This helps us solve complex problems in deep learning.

Improving Recurrent Neural Networks using Memory Mechanisms

Recurrent neural networks (RNNs) face an important challenge: managing long-term dependencies and complex tasks. Researchers have found ways to improve RNNs by adding memory mechanisms. These architectures make it easier for RNNs to store and recall essential information.

This makes RNNs better at solving complex problems. They can now handle tasks that need long-term thinking and decision-making.

Augmenting Recurrent Neural Networks for Complex Tasks

Neural Turing Machines and Differentiable Neural Computers are two key examples. They pair a neural network controller with an external memory that it can write to and read from, loosely analogous to how we use working memory. This makes the models better at dealing with long-term dependencies and complex tasks.
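Reading from such a memory is typically done by similarity rather than by a hard address. The sketch below shows content-based addressing in the style used by Neural Turing Machines: cosine similarity between a key and every memory row, sharpened by a strength `beta` and normalized with a softmax. The function name, the toy memory, and the `beta` value are assumptions for illustration.

```python
import numpy as np

def content_read(memory, key, beta=1.0):
    """Soft read: weight memory rows by cosine similarity to the key."""
    eps = 1e-8  # avoids division by zero for all-zero rows
    sims = memory @ key / (np.linalg.norm(memory, axis=1) * np.linalg.norm(key) + eps)
    scores = beta * sims                       # larger beta gives a sharper focus
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ memory, weights           # blended read vector and its weights

memory = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0]])
read_vec, weights = content_read(memory, key=np.array([0.0, 1.0, 0.0]), beta=20.0)
print(np.round(weights, 3))   # almost all weight on the second row
print(np.round(read_vec, 3))
```

Because the read is a weighted average rather than a lookup, the whole operation is differentiable, so the controller can learn what to look for through ordinary gradient-based training.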

These advancements have also boosted deep learning. As we explore more, we’ll see even more creative solutions in artificial intelligence.