Table of Contents

Introduction

Think about a world in which machines are capable of developing and making judgments like surgeons. This is what Support Vector Machines (SVMs) do. They are a powerful machine learning tool that changes the way we categorise and predict things.

SVMs power complex AI systems such as facial recognition and spam filters. They are so significant that they account for around 40% of all machine learning models used today.

In this guide, we’ll look at Support Vector Machines in depth. We’ll look at the fundamentals, how they’re applied in practice, and the most recent improvements in this important machine learning tool.

Understanding Support Vector Machine Fundamentals

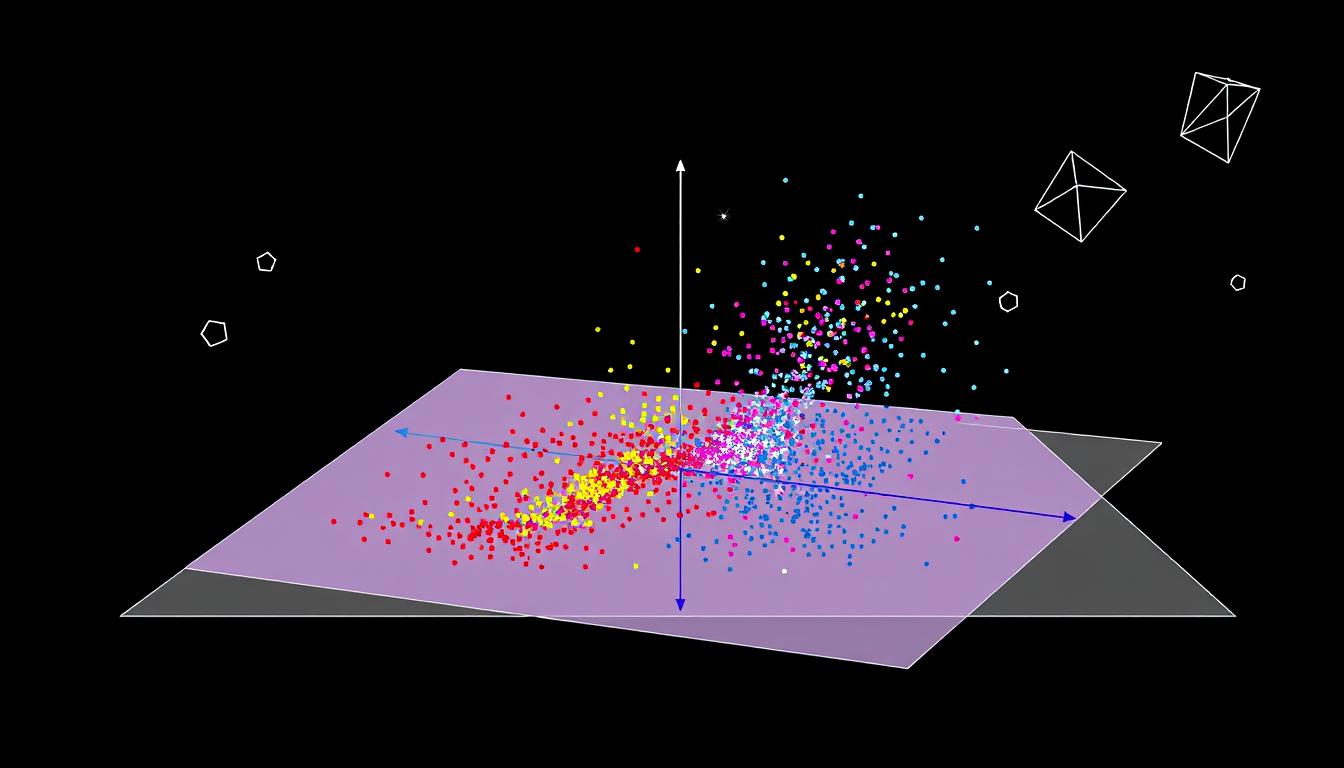

SVMs are a fundamental technique in machine learning. It supports classification and regression. SVMs try to find the best possible hyperplane to separate two classes and thus is very efficient for complicated problems. Now, let’s go through some basics and components that make these algorithms useful.

Basic Principles of SVM

The basic aim of SVMs is to find the best hyperplane for class separation. Hyperplane selection is made with a maximum margin. The distance to the nearest data points of classes on both sides is termed as margin. By such a mechanism, SVMs look towards structural risk minimization, thereby preventing overfitting and boosting generalization.

Core Components and Terminology

- Hyperplane: Decision boundary separating classes of data.

- Margin:Distance between the hyperplane and the nearest data points coming from each of the classes.

- Support Vectors those which lie closest to a particular hyperplane decide the position by themselves:.

- Kernel Function: A function that maps the input data into a higher space, thus allowing non-linear classification.

Mathematical Foundation

SVMs seek a hyperplane that maximizes the class margins. It is achieved using a quadratic programming problem. The objective is to minimize structural risk coupled with proper classification. The resulting hyperplane is defined by support vectors and weights. This helps you understand better how Support Vector Mach actually works.

Understanding these basics helps you appreciate how Support Vector Machines work. They are powerful tools for solving many classification problems in machine learning.

The Evolution of Support Vector Machines in Machine Learning

Support Vector Machines have been essential in many pattern recognition and classification algorithms that exist in machine learning. They began way back in the 1960s by Vladimir Vapnik and Alexey Chervonenkis. But it was during the 1990s that SVMs became highly used to solve tough problems in classifications.

SVMs primarily were two-class classification algorithms. They tried to classify between the best hyperplane dividing two classes. At the outset, machine learning was infant and had proved that other than binary, SVM can also solve problems.

This was a giant leap for SVMs. This was something that could make it possible for handling non-linear data by just mapping it into the higher space. It is the work of Bernhard Schölkopf and John Platt. It made it useful for most tasks, which led to its spread.

| Year | Milestone |

| 1960s | Fundamental principles of SVMs introduced by Vladimir Vapnik and Alexey Chervonenkis |

| 1990s | SVMs gain widespread recognition and adoption as a powerful classification algorithm |

| 1990s-2000s | Kernel trick and other advancements expand the capabilities of SVMs |

| Present Day | SVMs remain a widely-used and influential technique in the field of machine learning |

How Hyperplanes and Decision Boundaries Work

In the world of support vector machines (SVMs), hyperplanes and decision boundaries are key. They help SVMs sort data into different groups with great precision.

Optimal Hyperplane Selection

The main aim of an SVM is to find the optimal hyperplane. This hyperplane should best separate data points into their classes. It’s chosen to maximize the margin, or distance, to the nearest data points from each class.

Margin Maximization Techniques

- SVMs use quadratic programming to find the best hyperplane. This maximizes the margin between classes.

- This involves solving an optimization problem. The goal is to reduce the slack variables, which show misclassified points.

Support Vectors and Their Role

The points closest to the optimal hyperplane are called support vectors. They are vital in setting the decision boundary. These points are the most telling, guiding the SVM model’s training.

Understanding hyperplanes, decision boundaries, and support vectors reveals the beauty of SVMs. They are powerful tools for solving complex classification challenges.

Linear vs Non-linear Classification Methods

Support vector machines (SVMs) are key in machine learning for classification tasks. Traditional linear SVMs work well with data that can be separated by a line. But, they face challenges with data that isn’t so straightforward.

That’s where kernelized support vector machines come in. They use a kernel function to transform data into a new space. This feature mapping helps find complex patterns, even when data isn’t easily separated.

| Linear SVM | Kernelized SVM |

|---|---|

| Assumes data is linearly separable | Can handle non-linearly separable data |

| Finds a linear hyperplane to separate classes | Utilizes a kernel function to project data into a higher-dimensional feature space |

| Effective for simple, straightforward classification tasks | Suitable for more complex, non-linear classification problems |

Choosing between linear and kernelized SVMs depends on the problem’s complexity. Linear SVMs are quicker and simpler to understand. But, kernelized SVMs can tackle more complex problems, even if they’re harder to compute.

Knowing the differences between these methods is key. It helps pick the right SVM for your project.

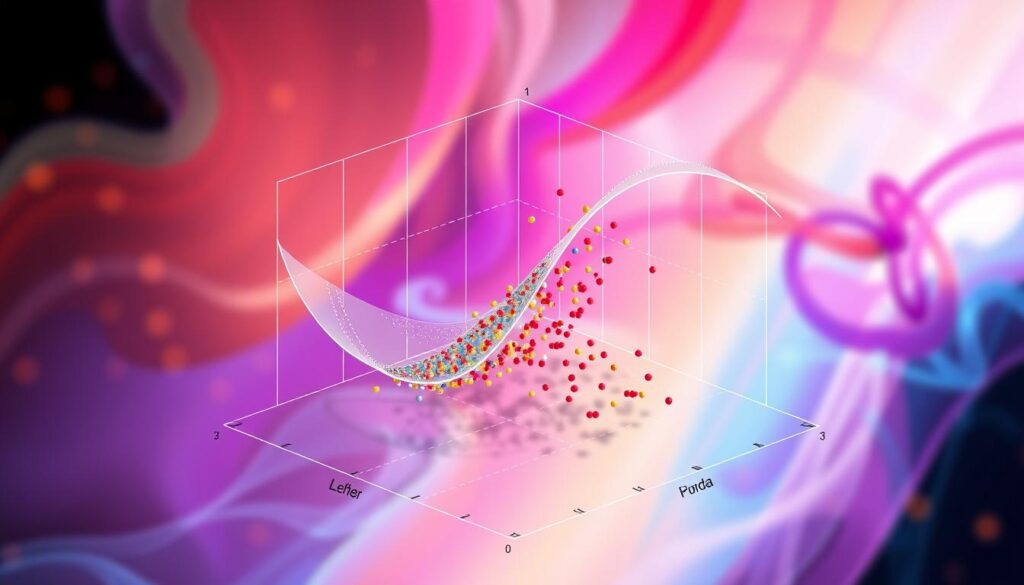

The Kernel Trick: Transforming Feature Spaces

Support Vector Machines (SVMs) are top-notch for both simple and complex tasks. The kernel trick is key to their success. It lets SVMs work with tough, non-linear data.

Common Kernel Functions

The kernel trick boosts data into a higher space for easier separation. There are several common kernel functions to choose from, like:

- Linear Kernel: The simplest, doing a basic linear change.

- Polynomial Kernel: Adds non-linear features with polynomials.

- Radial Basis Function (RBF) Kernel: Great for many non-linear patterns.

- Sigmoid Kernel: Similar to neural networks, it handles complex patterns well.

Selecting the Right Kernel

Picking the right kernel function is key for SVM success. It’s a mix of optimization techniques and trying different kernels to find the best fit for your data.

Feature Mapping Process

The feature mapping process is the heart of the kernel trick. It turns original features into a higher space for easier separation. This step is often quick, done without showing the exact transformed features.

The kernel trick is crucial for SVMs, making them great at solving complex problems. By picking the best kernel and optimizing the mapping, SVMs can perform well in many machine learning tasks.

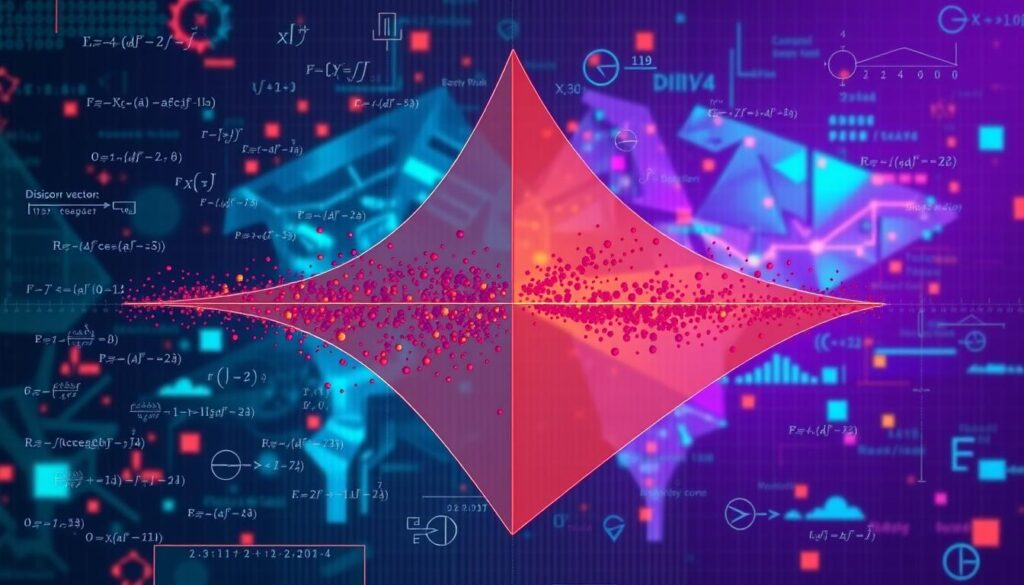

Preventing Overfitting Through Regularization

In the world of support vector machines classification, stopping overfitting is key for good models. Overfitting means a model does great on training data but fails on new data. This can make the model less useful.

In contrast, underfitting happens when a model is overly simplistic. It can’t catch the data’s patterns, leading to poor performance everywhere.

Regularization techniques are vital in SVMs to tackle these issues. Regularization adds a penalty to the model’s goal. This makes the model simpler and more general. It helps avoid overfitting and ensures the model works well on new data.

The C-parameter is a common regularization method in SVMs. It balances between a big margin and low training error. Adjusting the C-parameter helps find the right balance between overfitting and underfitting

| Regularization Technique | Description |

| C-parameter | Controls the trade-off between maximizing the margin and minimizing the classification error on the training data. |

| L1 Regularization (Lasso) | Encourages sparsity in the model, leading to feature selection and improved interpretability. |

| L2 Regularization (Ridge) | Penalizes the squared magnitude of the model’s coefficients, leading to a more stable and generalized model. |

Knowing and using the right regularization techniques is crucial. It helps your support vector machines classification models avoid overfitting and underfitting. This leads to accurate and reliable predictions.

SVM Applications in Real-world Scenarios

One of the main tools in machine learning is the support vector machine. This tool has been applied to real-life problems. Applications include text classification, natural language processing, image recognition, and bioinformatics. SVMs solve complex problems with a high degree of accuracy and speed.

Text Classification and NLP

SVMs are excellent tools for text classification and NLP. They can sort text into various categories like spam detection and sentiment analysis. Their skills in complex data handling make them the go-to for most text-based tasks.

Image Recognition Systems

Image recognition systems SVMs are the most commonly used algorithms for image recognition within computer vision. The use of kernels helps the algorithm to find complex patterns within images, which can be useful in detecting objects and faces. SVMs are widely used within many applications that are image-based, therefore showing their widespread applicability.

Bioinformatics Applications

The biological sciences is the field that merges biology with computer science. SVMs are applied very widely here, for protein prediction and disease diagnosis. They handle complex data well; hence their importance in bioinformatics is paramount. SVMs are really versatile; they contain the key for machine learning. With such a growth in AI and new challenges in various departments, SVMs will significantly contribute to these emerging roles.

Implementing Support Vector Machine Models

Machine learning is crucial in learning to use support vector machines. It does not matter if you are using MATLAB or another library; knowing how support vector machines work can make all the difference.

SVMs are great for both simple and complex tasks. By choosing the right kernel function, you can make your data easier to understand. This unlocks the full power of how support vector machines work.

- First, get to know popular SVM libraries like scikit-learn in Python or fitcsvm in MATLAB.

- Learn how important data prep, feature engineering, and tuning are for better SVM models.

- Try out different kernel functions like linear, polynomial, or RBF to find the best fit for your problem.

- Use cross-validation to pick the best model and make sure it works well on new data.

- Look into advanced SVM topics like multi-class classification and one-vs-rest methods to improve your models.

By getting good at support vector machines, you can solve many machine learning problems. This includes text classification, image recognition, and bioinformatics applications. Dive into SVMs and unlock their full potential.

Advanced SVM Techniques and Optimization

SVMs (Support Vector Machines) are getting better, thanks to new techniques. These improvements focus on handling complex tasks. Key areas include multi-class classification and fine-tuning parameters.

Multi-class Classification

SVMs were first for binary classification. But, the need for multi-class tasks led to new methods. These include:

- One-vs-Rest (OvR): Training multiple binary classifiers, each distinguishing one class from the rest.

- One-vs-One (OvO): Constructing binary classifiers for every pair of classes and combining their outputs.

- Directed Acyclic Graph SVM (DAG-SVM): Organizing binary classifiers in a hierarchical structure to make multi-class decisions.

Parameter Tuning Strategies

Improving SVM models means tweaking parameters like the penalty parameter (C) and the kernel function. Good tuning methods are:

- Grid Search: It systematically tests all values of parameters such that some optimal combination is found.

- Random Search: Sampling the parameter space randomly by using random values of parameters from some predefined distribution.

- Bayesian Optimization: Search for the optimal parameters using a probabilistic model rather than brute force, which reduces the number of evaluations required..

Application for fine-tuning using improved SVM techniques and optimization by experts solves complex problems or achieves the best result using some real-world applications.

| Technique | Description |

|---|---|

| One-vs-Rest (OvR) | Training multiple binary classifiers, each distinguishing one class from the rest |

| One-vs-One (OvO) | Constructing binary classifiers for every pair of classes and combining their outputs |

| Directed Acyclic Graph SVM (DAG-SVM) | Organizing binary classifiers in a hierarchical structure to make multi-class decisions |

| Grid Search | Systematically evaluating a range of parameter values to find the optimal combination |

| Random Search | Randomly sampling parameter values from a specified distribution to explore the parameter space |

| Bayesian Optimization | Using a probabilistic model to guide the search for optimal parameters, reducing the number of evaluations required |

Comparing SVM with Other Machine Learning Algorithms

One very strong machine learning tool would include Support Vector Machines. One can easily use SVM in various classification and regression scenarios. It has some nice advantages over other algorithms for solving these problems.

Unlike decision trees or random forests, SVMs handle high-dimensional data well. They can also model complex, non-linear relationships effectively.

While deep learning algorithms might need a lot of data, SVMs can perform well with less. They are also less likely to overfit, which is important for tasks like image recognition or bioinformatics.

But, SVMs have their downsides. They might not be the best for all regression tasks. For those, linear regression or gradient boosting could be better. Choosing the right kernel function and tuning hyperparameters can also be tricky.