Table of Contents

Introduction

Over 70% of data scientists love the K-Nearest Neighbor algorithm, did you know this? It is an important tool in finding patterns within data. This post should clarify how it works, the math behind why it does what I just mentioned and ways you can use them.

KNN is the simplest and one of the most powerful machine learning algorithms. It determines the K-nearest data points of a new input. This assists in generating correct predictions or classifications. Data scientist or data analyst, beginner in Machine learning and professional K-Nearest Neighbour always a best choice to solve problems.

Basics of KNN and Advanced uses It might just change the way we approach problem-solving in health-care, finance and many other domains around us. Introducing theK-Nearest Neighbour Algorithm — A True Machine Learning Powerhouse

Understanding the Fundamentals of KNN Algorithm

K-Nearest Neighbour is a form of instance-based learning, or lazy learning where the function is only approximated locally and all computation is deferred until data points are requested. It is used for Classification and Regression tasks. Its mechanism is to look inward of how near the data points are apart from each other.

Foundation Concepts and Terminologies

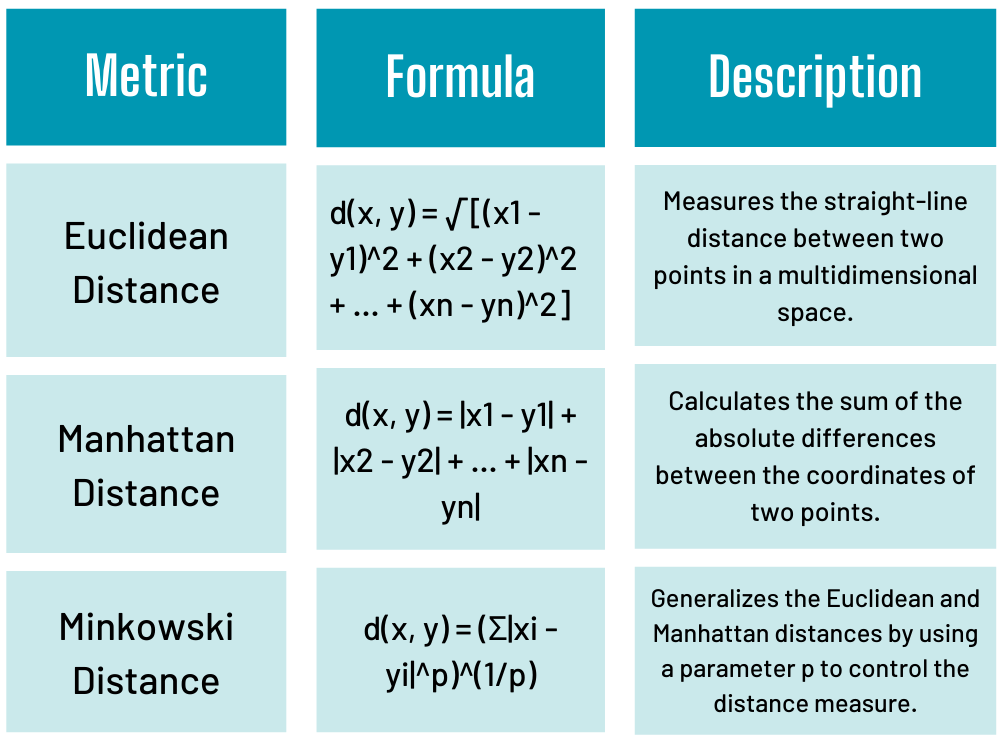

K-Nearest Neighbour is a lazy learner algorithm which finds the k-nearest neighbors of data and based on majority vote decision has been made for new given unknown instance points. A distance metric such as Euclidean or Manhattan is used to measure the closeness. However, the value of K (the number of neighbors) is crucial to determine how good our algorithm will perform.

Core Components of KNN

The KNN algorithm has a few main parts:

- Distance metric: This is how it measures closeness between points, like Euclidean or Manhattan.

- Neighbor selection: It finds the K closest neighbors to a point.

- Decision making: It uses the neighbors’ features to make predictions or classifications.

Historical Development and Evolution

The nearest neighbors algorithm started in the 1950s. It was first used for pattern recognition. Now, it’s used in many areas, like image recognition and text classification.

KNN’s simplicity and flexibility make it a favorite among data scientists. It’s still growing in the field of machine learning

The Mathematical Framework Behind KNN

The KNN algorithm is based on some solid math. That is the mathematical foundation, which enables it to predict values about data and classify items. Let’s examine the critical parts of KNN-that are distance metrics and decision boundaries.

Euclidean Distance is yet another important metric of K-Nearest Neighbour. It computes the distance between two points in space. This is how KNN goes about finding the nearest k neighbors, a very crucial step in its technique of classification. In KNN, for supervised learning, closeness of data points is used for the prediction. It involves a decision boundary defined based on the class labels of the k nearest neighbors. Hence, its ability to predict the class of a new point is based on the vote of its closest neighbors.

How KNN Works in Pattern Recognition

KNN Algorithm: The k-nearest neighbour algorithm is the heart of many machine learning tools. It’s great at recognizing patterns. It uses closeness to guess and sort data points. Knowing how K-Nearest Neighbourworks helps us see its uses and strengths.

Distance Metrics and Calculations

The first step in KNN is picking a distance metric. This metric shows how similar data points are. Common ones are Euclidean, Manhattan, and Cosine similarity. The right one depends on the data and the problem.

Neighbor Selection Process

After choosing a metric, K-Nearest Neighbour finds the k closest neighbors to a point. It calculates distances to all points and picks the k closest. The k value greatly affects the algorithm’s success.

Decision Making Mechanisms

The last step is making a decision. KNN uses the k nearest neighbors to guess or classify a point. For classification, it goes with the most common class among the k neighbors. For regression, it averages the values of the k neighbors.

Understanding KNN’s parts helps us see its wide range of uses. It’s useful in many areas, like image recognition and customer grouping.

KNN as a Supervised Learning Method

K-Nearest Neighbour is definitely one of the most important algorithms in supervised learning under machine learning. It uses labeled data for training and predictions. This way, it learns from examples and classifies new data points well.

KNN’s core is about finding the closest neighbors to a data point. It uses their labels to guess the class of the new instance. This method is great for tasks like image recognition and medical diagnosis.

KNN is simple and easy to understand. It makes predictions by using the knowledge of its nearest neighbors. This simplicity makes it a popular choice for many machine learning tasks.

Even though KNN is not the most complex algorithm, it’s very useful. It works well with different problems and can handle noisy data. We’ll look into how K-Nearest Neighbour achieves accurate results in the next sections.

In short, KNN’s supervised learning approach makes it unique. It uses labeled data for classification and prediction. Its simplicity, flexibility, and effectiveness make it a top choice for many machine learning tasks.

Implementing KNN for Classification Tasks

The K-Nearest Neighbors algorithm is great for many machine learning and data mining applications. Here’s how to use it for classification. I’ll provide code examples and some hints on how to make it work better.

Step-by-Step Implementation Guide

In classifying, one can apply K-Nearest Neighbour as follows:

- Prepare your data and ensure that it meets the requirements of KNN algorithm.

- Use a distance metric, such as Euclidean or Manhattan, to calculate similarities.

- Choose the appropriate K, which is how many neighbors to sample.

- Use the KNN algorithm to find the closest neighbors and decide on a class.

- Test out the KNN classifier on some new data; fine-tune from there.

Code Examples and Best Practices

- Normalization: All features in your dataset should be normalized so that no feature is biased.

- Choose the appropriate value of K: Pick K based on your problem’s complexity and dataset size.

- Apply cross-validation: Finally, use cross-validation to validate your model’s performance, so it not overfits.

- Handling the issue of imbalanced datasets: Oversampling or undersampling to enhance performance on imbalanced datasets.

Performance Optimization Tips

To make your KNN better, try the following:

- Feature selection: Select for your task the features most relevant for improving accuracy.

- Dimensionality reduction: Use PCA or t-SNE to make your data smaller and faster to process.

- Parallelization: Use frameworks like Dask or Spark to speed up distance calculations and classification.

- Algorithm optimization: Explore more sophisticated K-Nearest Neighbour approaches such as ball trees or k-d trees where needed to improve efficiency.

Some guidelines, examples, and tips would allow you to use K-Nearest Neighbour effectively in any of your projects.

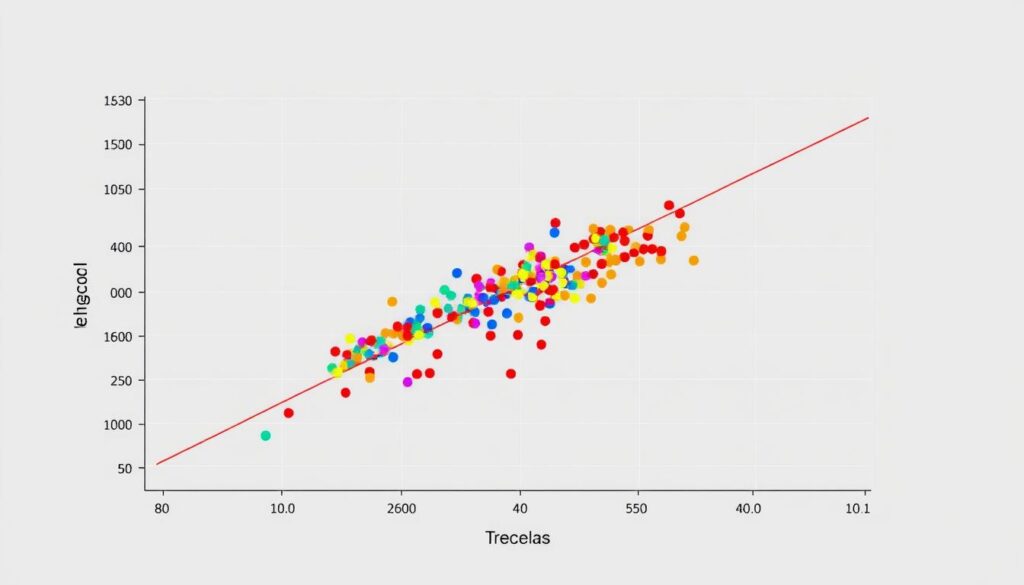

KNN in Regression Analysis

though K-Nearest Neighbour is known more for classification, it has also played its part very well with regression. Let’s see how KNN adapts to such tasks and how it works in comparison with other methods.

In regression, K-Nearest Neighbour predicts continuous values, unlike classification where it predicts discrete labels. It finds the k nearest neighbors and uses their values to estimate the new data point’s output.

ForK-Nearest Neighbour regression, we follow a similar process as classification but with some tweaks. Instead of voting, we average the target values of the k nearest neighbors. This method is effective for continuous regression problems.

KNN regression is great for complex, non-linear data. It doesn’t assume a linear relationship like linear regression does. This makes it good at finding intricate patterns in data.

| Regression Technique | Strengths | Limitations |

|---|---|---|

| Linear Regression | Simple, easy to interpret, and computationally efficient | Assumes a linear relationship between features and target |

| KNN Regression | Flexible in modeling non-linear relationships, can capture complex patterns | Computationally intensive, sensitive to the choice of k and distance metric |

| Decision Tree Regression | Intuitive, can handle both linear and non-linear relationships | Prone to overfitting, can be less accurate than other methods |

Advantages and Limitations of KNN

KNN actually has its merits and demerits. This is for both machine learning and pattern recognition. Most importantly, knowing the pros and cons can help make a good choice about which algorithm to use for what task.

Strengths in Different Applications

It is very simple and straightforward to use. This algorithm does not need to know the distribution of the data beforehand and can thus be used as a non-parametric technique for any kind of machine learning task, such as

- Image classification

- Pattern recognition in data-driven fields

- Recommendation systems

- Anomaly detection

Common Challenges and Solutions

But KNN has its downsides. One big challenge is the curse of dimensionality, where it struggles with more features. To fix this, you can use dimensionality reduction or feature selection to improve the classification technique.

Another issue is finding the right ‘k’ value (the number of nearest neighbors). Finding the best ‘k’ can take some trial and error. Cross-validation can help find the optimal ‘k’ value.

Performance Comparison with Other Algorithms

Compared to other algorithms, KNN might not always be the best choice, especially with big, complex data. But, K-Nearest Neighbour is great when you need something simple and easy to understand.

In summary, KNN is a flexible algorithm with many uses. But, its performance should be carefully checked against the specific needs of the problem.

Real-World Applications and Use Cases

There are other KNN applications besides the previous ones in the applied science sector, even in health fields. It is able to diagnose diseases, predict the patient outcome, and establish risk factors. It, for instance, assists doctors in identifying other types of cancer, thus guiding the same doctors on making more sound decisions.

K-Nearest Neighbour is applied in finance to credit risk assessment, fraud detection, and stock price prediction. Financial institutions are therefore making better choices and reducing risks by basing their decisions on KNN knowledge. This is because of its proficiency in pattern recognition.

In natural language processing (NLP), K-Nearest Neighbour is used for analyzing sentiment, classifying text, and translating languages. It looks at the similarity of text data to sort documents, emails, and social media posts. This is very useful for businesses to understand what customers think and improve their marketing.