Introduction

Machine learning (ML) projects need more than accurate models. They also need efficient and reliable pipelines. That’s where MLOps comes in: it brings the discipline of software operations to machine learning and ties model work into your software development lifecycle.

But what is MLOps, and how can it change your ML application management? Let’s explore.

Ready to boost your machine learning models and make your ML pipeline smoother? Our MLOps guide is here to help. You’ll learn about the key components, benefits, and best practices to elevate your ML projects.

Understanding the Fundamentals of MLOps

MLOps (Machine Learning Operations) is critical to keeping your ML pipeline running smoothly. It combines machine learning, software engineering, and DevOps. Together, these disciplines help automate each step in the life cycle of an ML model.

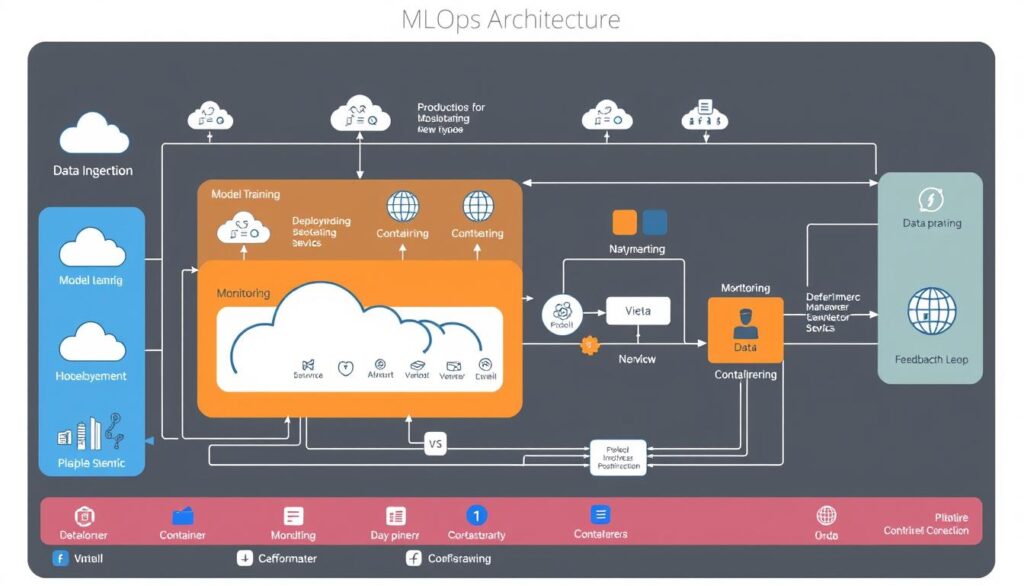

Core Components of MLOps Architecture

The MLOps architecture has three main parts: data management, model training, and deployment. Data teams handle data collection, prep, and versioning. They make sure the data is good for training models.

Model training involves trying out different algorithms and tweaking settings. This makes the model better. The deployment stage puts the model into action, watches its performance, and updates it when needed.

Key Benefits of MLOps Implementation

Using MLOps brings big advantages. It speeds up getting new ML products or features to market. It also makes models better by watching and updating them automatically. Plus, it helps data scientists and software engineers work better together.

By automating the ML process, MLOps makes the whole ML lifecycle smoother, from data preparation through training to deployment and monitoring.

MLOps vs Traditional DevOps

Traditional DevOps deals with software development, while MLOps tackles the special needs of machine learning. Unlike regular software, an ML model depends on data as well as code, so it needs ongoing monitoring, retraining, and redeployment.

MLOps uses tools and methods built for these needs. This includes managing model versions, tracking experiments, and running tests automatically.

Building Strong Data Pipelines for Machine Learning

Strong data pipelines are critical in machine learning (ML). They ensure data quality, consistency, and reproducibility when training and verifying ML models.

Data versioning is crucial in building effective pipelines. It allows you to track changes and revert to previous versions if needed. Tools like Git LFS and DVC help manage data versioning and keep a detailed history of your dataset.
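To make the idea concrete, here is a minimal sketch of content-based versioning: it fingerprints a dataset file so that any change to the data produces a different identifier. The function name `dataset_fingerprint` is hypothetical, and this only illustrates the general principle. It is not how Git LFS or DVC are implemented.

```python
import hashlib
from pathlib import Path


def dataset_fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large datasets do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Storing the fingerprint alongside each training run lets you check later whether a model was trained on exactly the data you think it was.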

Data preprocessing and feature engineering are also vital. A standardized process for cleaning, transforming, and extracting features is essential. This includes techniques like missing value imputation and categorical feature encoding.
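As a rough illustration of the two techniques just mentioned, the sketch below uses only the Python standard library. The helper names `impute_mean` and `one_hot` are made up for this example. In a real project you would more likely reach for Pandas or Scikit-learn.

```python
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def one_hot(values):
    """Encode categories as 0/1 vectors, one column per sorted category."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


ages = impute_mean([30, None, 50])                  # the gap becomes 40
columns, encoded = one_hot(["red", "blue", "red"])  # columns: blue, red
```

Keeping steps like these in shared, tested functions is what makes the preprocessing standardized: training and serving apply exactly the same transformations.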

| Technique | Description | Example Tools |
|---|---|---|
| Data Versioning | Tracking changes and reverting to previous versions of your dataset | Git LFS, DVC |
| Data Preprocessing | Cleaning, transforming, and engineering features from raw data | Pandas, Scikit-learn, Spark |
| Reproducible ML | Ensuring consistent and repeatable model training and deployment | MLflow, Kubeflow, Airflow |

Model Development and Version Control Best Practices

Strong version control is key to managing models across their lifecycle. Using Git in your ML infrastructure makes your machine learning projects run smoother. It also helps your team work together better.

Git Integration for ML Projects

Git is a top choice for managing code in machine learning. It helps you track changes, work on code together, and keep a detailed history of your model’s development. This lets your team try new things, go back to old versions if needed, and make sure their models work the same way every time.

Managing Model Artifacts

Managing your model’s files is just as important as the code. You need to keep track of your trained models, settings, and other important files. Storing these in one place makes it easy for your team to find and use what they need for deploying, watching, and updating models.

Code Repository Organization

- Make a clear plan for your ML project’s folders, keeping code, data, and model files separate.

- Use the same names for files and folders to make your project easier to understand and navigate.

- Use branch management to help your team work together and keep the code clean.

Following these model development and version control tips will make your ML infrastructure better. This will help your machine learning projects succeed in the long run.

Continuous Integration for Machine Learning Projects

The domain of machine learning (ML) is expanding quickly. This means that we need improved methods for developing and deploying models. Continuous integration (CI) is critical in the MLOps environment. It facilitates the smooth integration of machine learning models into our development pipelines.

CI makes testing and validating ML models easier. By adding ML models to a CI/CD pipeline, we can check their performance before they go live. This ensures they meet the standards needed for production.
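One way to picture such a check is a quality gate that compares a candidate model's metrics against minimum thresholds. The sketch below is an assumption about how a team might wire this up. The function name, metric names, and threshold values are all illustrative.

```python
def failed_checks(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the names of metrics that are missing or below their minimum."""
    return [
        name
        for name, minimum in thresholds.items()
        if metrics.get(name, float("-inf")) < minimum
    ]


candidate = {"accuracy": 0.93, "f1": 0.78}  # hypothetical evaluation results
required = {"accuracy": 0.90, "f1": 0.80}   # hypothetical production standards
failures = failed_checks(candidate, required)
# In a CI job, a non-empty list would typically fail the build.
```

Here the candidate clears the accuracy bar but misses the F1 bar, so the pipeline would stop before deployment.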

CI also helps with managing model versions and artifacts. It works well with tools like Docker and Kubernetes, which make building and deploying ML models more scalable and reliable.

| Feature | Benefit |
|---|---|
| Automated Model Testing | Ensures model quality and performance before deployment |
| Versioning and Artifact Management | Provides a centralized repository for model checkpoints and artifacts |
| Integration with CI/CD Pipelines | Seamlessly incorporates ML models into existing software development workflows |
| Containerization and Orchestration | Enables scalable and reliable model deployment and management |

Model Deployment Strategies and Best Practices

In the world of MLOps, deploying models is key to success. Whether you’re moving a model to production or scaling your ML setup, knowing the strategies and best practices is crucial.

Containerization with Docker

Containerization with Docker is a top choice for deploying models. Docker wraps your model, its needs, and the environment into one container. This makes deployment easier and keeps things consistent from start to finish.

Kubernetes Orchestration

After containerizing your model, use Kubernetes for orchestration. Kubernetes schedules your containers across a cluster, restarts them when they fail, and scales them with demand. This helps you manage your models’ life cycle, scaling, and availability.

Scaling Considerations

As your models and demand grow, scaling your ML deployment is vital. You might need more resources or autoscaling to meet needs. This ensures your models run smoothly and efficiently.
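To show what autoscaling does in practice, here is a small sketch of the core rule that the Kubernetes Horizontal Pod Autoscaler documents: scale the replica count by the ratio of the observed metric to the target metric, then round up. The function name and the default bounds are assumptions, and the sketch leaves out details such as the tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale replicas in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Four replicas at 90% average CPU with a 60% target scale out to six.
replicas = desired_replicas(4, current_metric=90, target_metric=60)
```

The same rule scales back in when load drops, which is what keeps serving costs in line with demand.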

By sticking to these best practices, you’ll deliver your models reliably and efficiently. This boosts your MLOps success.

Automated Testing and Quality Assurance

ML models need to stay in good shape while data and requirements change quickly. That makes automated testing and quality control especially important, because much of the ML lifecycle depends on them.

Automated testing is vital for keeping your ML pipelines reliable and well-governed. With strict test suites, you can check your models’ health, spot problems, and ensure they work the same everywhere.

- Unit tests: Check if each part of your ML models works right.

- Integration tests: Make sure different parts of your ML pipeline work together smoothly.

- End-to-end tests: Test the whole ML process, from getting data to making predictions, to see if everything works as it should.
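As an example of the first kind of test, the sketch below unit-tests a single preprocessing step. The `min_max_scale` function is a hypothetical pipeline component invented for this illustration, and the tests are plain functions of the kind a runner such as pytest can discover.

```python
def min_max_scale(values):
    """Scale values linearly into the range [0, 1]."""
    low, high = min(values), max(values)
    if low == high:
        # A constant column carries no signal; map it to zeros.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def test_output_spans_unit_interval():
    assert min_max_scale([10, 20, 30]) == [0.0, 0.5, 1.0]


def test_constant_input_does_not_divide_by_zero():
    assert min_max_scale([7, 7]) == [0.0, 0.0]
```

Integration and end-to-end tests follow the same pattern but exercise several components together, usually on a small fixed sample of data.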

Quality assurance in MLOps is more than just testing. It’s about setting strong ML governance rules to keep your models sound, clear, and accountable from start to finish. This includes:

- Creating clear rules for model versions and tracking them

- Setting up model checks and approval steps

- Keeping detailed model notes and history

By using automated testing and solid quality assurance, you make your ML models more reliable, reproducible, and well-governed. This leads to more successful and trustworthy uses of your models.

| Automated Testing Techniques | Benefits |
|---|---|
| Unit Tests | Validate individual model components |
| Integration Tests | Ensure seamless integration of pipeline modules |
| End-to-End Tests | Simulate the entire ML workflow |

Model Monitoring and Performance Tracking

In the world of MLOps, keeping an eye on your models is important. With proper tracking, you can check how your models perform in real time. This allows you to identify problems and keep your models functioning properly.

Real-Time Monitoring Tools

Real-time monitoring tools are critical because they show how your model behaves while it serves traffic. This way, you can quickly identify and resolve any issues. Tools such as Prometheus, Grafana, and Datadog are well suited to this, as they provide customizable dashboards and alerts.

Detecting Model Drift

Model drift happens when a model’s performance gradually gets worse, typically because production data shifts away from the data the model was trained on. By watching your model’s behavior, you can catch drift early and retrain or update the model before predictions suffer. A/B testing and canary deployments are good ways to roll out the updated model safely.
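Labels often arrive late in production, so one common proxy for drift is to compare the distribution of an input feature (or of the model’s scores) between a reference sample and live traffic. The sketch below uses the Population Stability Index (PSI) for this. The function name, the bin count, and the 0.25 alert level are assumptions. The threshold is a widely quoted rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare two samples by binning them over the reference range."""
    low, high = min(expected), max(expected)
    width = ((high - low) / bins) or 1.0  # avoid a zero-width bin

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp values outside the reference range into the edge bins.
            index = int((x - low) / width)
            counts[max(0, min(bins - 1, index))] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = list(range(100))         # stand-in for training-time values
live = [x + 50 for x in range(100)]  # stand-in for shifted production values
drifted = population_stability_index(reference, live) > 0.25
```

A check like this can run on a schedule and raise an alert, prompting a closer look at the model’s actual performance once labels become available.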

Performance Metric Analysis

Analyzing your model’s performance data is critical. Metrics like accuracy and F1-score indicate how well your model is performing. Reviewing them regularly shows you when performance starts to slip and whether a retrained model is a real improvement.
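For reference, here is a minimal sketch of how these two metrics are computed for a binary classifier, written in plain Python with made-up labels. In practice a library such as Scikit-learn provides equivalent functions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives means precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


y_true = [1, 0, 1, 1, 0, 0]  # illustrative ground-truth labels
y_pred = [1, 0, 0, 1, 1, 0]  # illustrative model predictions
acc = accuracy(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
```

Accuracy can look healthy on imbalanced data even when the model misses most positive cases, which is why it is usually tracked alongside F1.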

By focusing on model monitoring and performance tracking, your models will keep working well. This keeps you competitive in the fast-changing world of MLOps.

Conclusion

Throughout this guide, we’ve seen why MLOps best practices are key. They help make machine learning pipelines smoother and AI deployments more successful. By using strong data pipelines and smart model deployment, companies can get the most out of their ML efforts. This ensures their models work well and bring real value to the business.

Tools like Docker and Kubernetes are essential for managing the model lifecycle. They help teams handle everything from training to keeping models up to date. By following these MLOps best practices, teams can make their ML pipelines better. This means they can innovate faster and stay ahead in the tech world.

MLOps’ future seems promising, with more development and innovation on the way. As businesses require more scalable and trustworthy machine learning solutions, new tools and approaches will emerge. Businesses that keep up with these changes can thrive in an AI-driven environment.